SENSITIVITY AND POWER ANALYSES

In our paper “Speciation with gene flow in North American Myotis bats”, we explored how the probability of each model changes as more data (loci) were analyzed. We did not analized our data hundreds of times in subests of loci! We analized all the data at ones (one run per model) and then calculated model averages in sets on loci.

First, analyze all your data (as described in the RUNNING PHRAPL section). Be sure to used the option print.matches=TRUE the GridSearch function.

#############################################

### Analyze all the data using GridSearch ###

# Be sure to set print.matches=TRUE

result<-GridSearch(...,print.matches=TRUE)

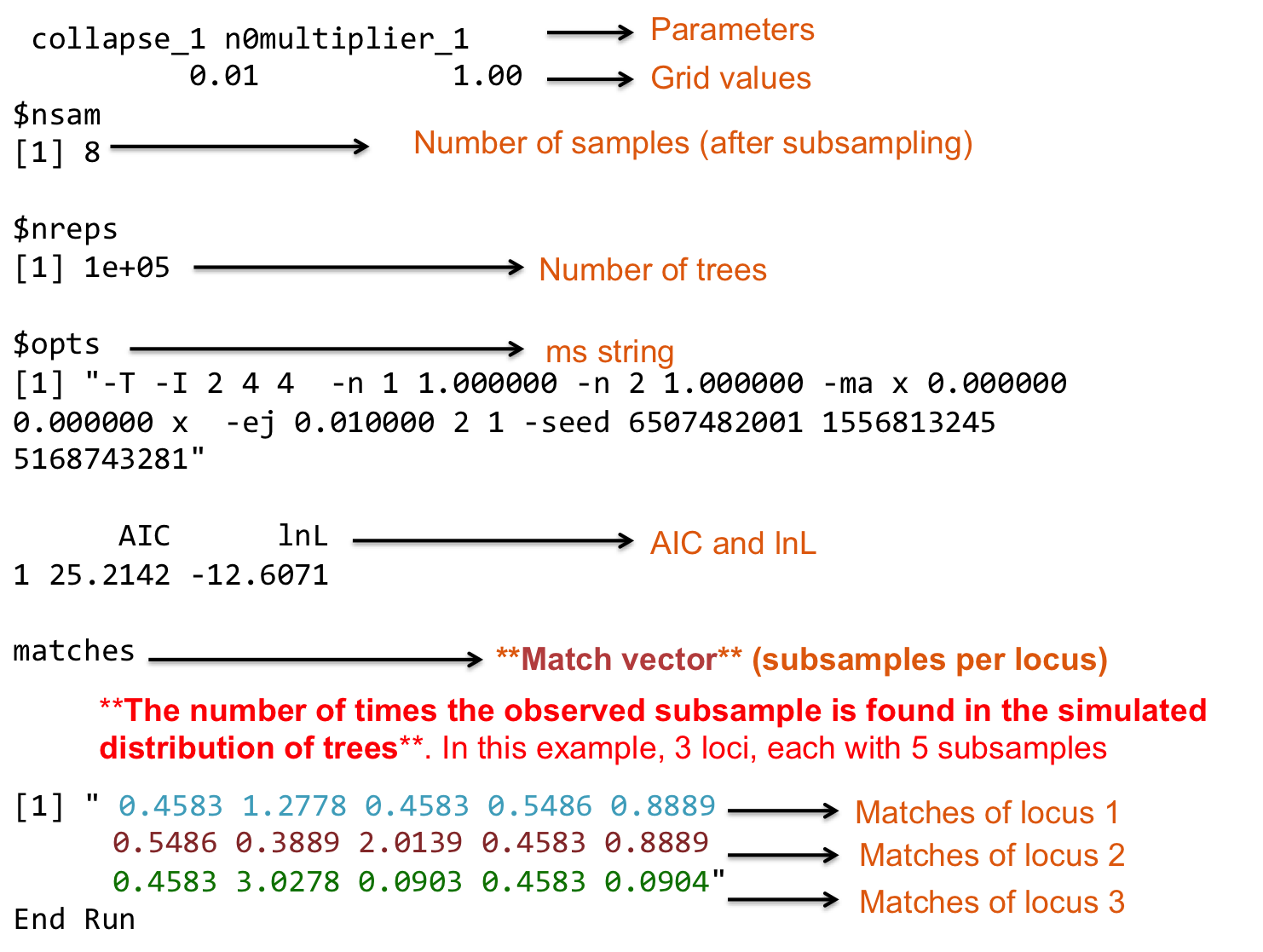

Each run will generate two files, one with extension rda and one with extension Rout. The rda files will be used to post-process the results and calculate final model averages. The Rout files will contain information of the GridSearch performance, we are interested in the match vector, which contains information of the number of times that each subsample in each locus was found in the simulated distribution of trees.

This figure represents a GridSearch output listing parameter grids, grid values (or grid points), running settings (such as nsam, nreps, opts), results (AIC and lnL), and the number of matches. There will be one list per model and per grid parameter combination.

Before recalculating model averages per locus (or subset of loci), it is necessary to extract the match vectors:

#######################################################################################

### Create a bash script to extract match vector from Rout files (from phrapl runs) ###

# Example of command line per model

# grep matches -A1 script_808loci_subs200_1_5.Rout | grep -v matches | grep 0 > matches_1_5.txt

# Set working directory (location of Rout files)

setwd("/path_to_Rout_files") #--------> This is the only line you have to change in this script!

RoutFiles<-list.files(getwd(), pattern="*.Rout", full.names=FALSE)

RoutFilesSplited<-strsplit(RoutFiles, "_")

# Create a command line per file

grep_command<-c()

for(model in 1:length(RoutFiles)){

matches<-paste("grep matches -A1 ",RoutFiles[model]," | grep -v matches | grep 0 > matches_",RoutFilesSplited[[model]][3],".txt", sep="")

grep_command<-append(grep_command,c(matches))

}

# Save bash script and execute it

write(grep_command, file="extractRout_matchesVector.sh")

system("sh extractRout_matchesVector.sh") # Run bash script

Then, the function GenerateSetLoci will re-calculate AICs and parameter estimates for subsets of loci. This can either be done for independent subsets of loci (cumulative=FALSE) or for accumulating subsets of loci (cumulative=TRUE, in which the second subset is added to the first subset, and then the third subset is added to these, etc). lociRange gives the number of loci in the original analysis and NintervalLoci gives the desired number of loci in each subset, which must be a multiple of the total number of loci. If the models in the original analysis are only a subset of models in the migrationArray, this modelRange must be specified as well. Output of this function is an rda file for each locus subset (numbered in order with a numerical suffix, i.e., _1, _2, _3, etc). Note that subsets are taken in order from the original output files.

Note: GenerateSetLoci is still being modified to make it more amicable. Here is one of the latest versions that you can download and load as a source file.

# Set working Directory

setwd("/your_working_directory/subsets") # Advice: create a folder "subsets" where the new RDA output files will be saved

# Load phrapl

library(phrapl)

# Load phrapl input (subsampled dataset)

load('/path_to_input/phraplInput.rda')

# Load `migrationArray` (set of models) file

load('/path_to_migrationArray/MigrationArray_3Pop_3K.rda')

#Load GenerateSetLoci function (if not available)

source('/path_to_function/GenerateSetLoci_cum8_v3.R')

###########################

#### Define arguments ###

nloci<-808 # Total number of loci

NintervalLoci<-8 # Number of loci per subset

modelRange<-1 # The model(s) that will be analyzed

migrationArrayShort<-migrationArray[modelRange]

lociRange<-c(1:808) # The loci range that will be included in the subsets

subsamplesPerGene<-200 # Same value used in subsampling

popAssignments<-list(c(2, 2, 2)) # Number of individuals that were subsampled per population (SAME used in GridSearch)

collapseStarts<-c(0.3, 0.58, 1.11, 2.12, 4.07, 7.81, 15) # SAME grid values used in GridSearch

migrationStarts<-c(0.1, 0.22, 0.46, 1, 2.15, 4.64) # SAME grid values used in GridSearch

n0multiplierStarts<-NULL # SAME grid values used in GridSearch

setCollapseZero<-NULL # SAME grid values used in GridSearch

nTrees<-1e+05 # Number of simulated trees (SAME used in GridSearch)

subsampleWeightsVec=subsampleWeights.df[[modelRange]][,1] # SubsampleWeights (SAME used in GridSearch)

#####################################

### Load files with match vectors ###

rdaFilename<-'/path_to_RDA_output_files_for_a_given_model/phrapl_out_sub200_model1.rda'

RoutFilename<-read.table(file='/path_to_modeloutputVector_files_for_a_given_model/matches_model1.Rout.txt',skip=1)

#####################################################

### Recalculate model averages in subsets of loci ###

# Command line to run function GenerateSetLoci

GenerateSetLoci(lociRange=lociRange,NintervalLoci=NintervalLoci,RoutFilename,rdaFilename,migrationArr

ay=migrationArray,modelRange=modelRange,subsamplesPerGene=subsamplesPerGene,collapseStarts=collapseSt

arts,migrationStarts=migrationStarts,n0multiplierStarts=n0multiplierStarts,setCollapseZero=setCollaps

eZero,cumulative=TRUE,nTrees=nTrees,dAIC.cutoff=2,nEq=nEq, subsampleWeightsVec=subsampleWeightsVec)

Finally, it will be necessary to calculate model averages as describe in POST-PROCESSING. It would be useful to write a loop to post-process all subsets per model at ones. Here is an example:

#########################################

### Postprocess RDA files per subset ###

# Load `migrationArray` (set of models) file

load('/path_to_migrationArray/MigrationArray_3Pop_3K.rda')

# Set paths to input and output files

pathSubsets<-"/path_to_subsets_RDA_files/" # Files created by GenerateSetLoci. Do not forget "/" at the end

pathtotalData_subsets<-"/path_to_output_totalData_files_by_subsets/" # Do not forget "/" at the end

# Loop to analyze output files ----> Will generate a totalData file per subset per model

# You may need to create one folder per subset and move files to each folder

for(i in 1:101){ ## ------> Be careful to specify the total number of subsets (in this case 101)

totalData<-list()

totalData<-ConcatenateResults(rdaFilesPath=paste(pathSubsets,"subset",i,"/",sep=""),rdaFiles=NULL, migrationArray, rm.n0=TRUE, longNames = TRUE, outFile=NULL,addAICweights=TRUE,addTime.elapsed=FALSE, nonparmCols = 4)

#modelAverages<-CalculateModelAverages(totalData,parmStartCol=9)

write.table(totalData, file=paste(pathtotalData_subsets,"totalData_subset",i,".txt",sep=""), sep="\t", row.names=FALSE)

}

The goal is to obtain one totalData file per subset that will contain AIC, wAIC and lnL values per set of loci. With a couple of loops, you can extract that information. This is how we summarize that information for Myotis bats:

Fig. 2b from Morales et al. (2016)

Fig. 2b from Morales et al. (2016)